With AI on the rise for the last few years, school life has changed for teachers and students alike. AI can summarize books the student never reads, write essays without the student lifting a finger, solve complex math problems in no time, and create images and music without any creativity on the user’s part.

That raises the question: When is it acceptable to use AI assistance, and where do students and teachers draw the line? When does the use of AI start to hinder instead of help in the classroom? What are the limits?

“I think it’s wrong if you’re not actually thinking,” said sophomore Molly Edwards, “and you just take a picture of your work and you put [it] into AI instead of actually thinking, but I think it’s fine if you’re using it to check your answers or to fix your answers.”

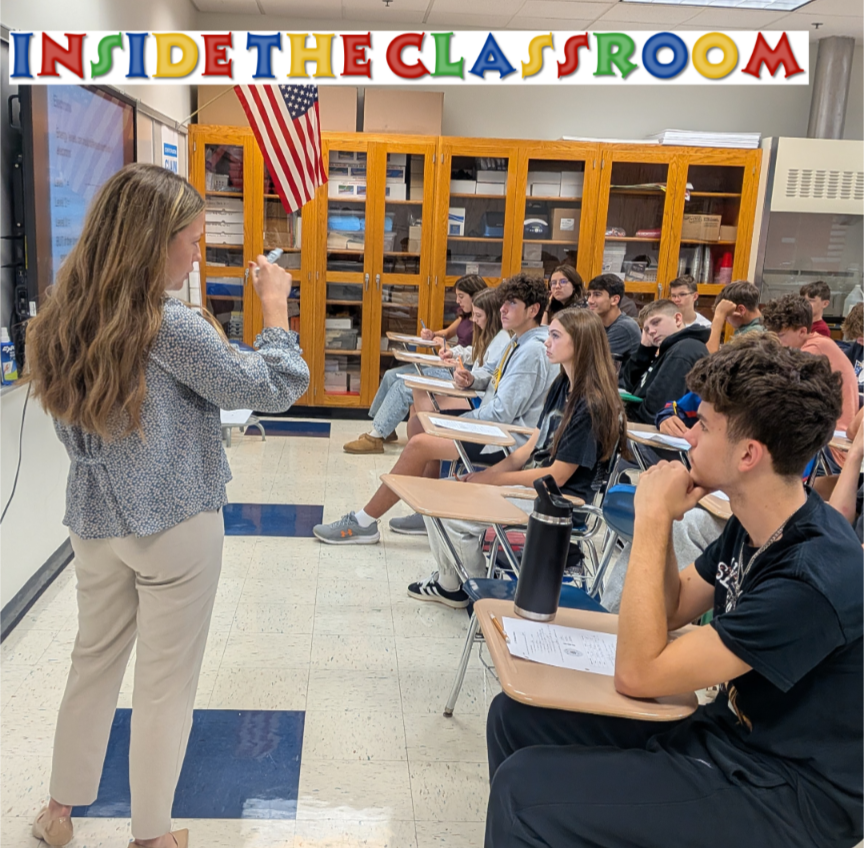

Norwin hasn’t been exempt from these changes; some teachers are running with generative AI and trying to use it in the best way possible, while others do their best to curb its rise in their classrooms.

“Students in my English classes regularly use AI tools for when we’re writing a research paper,” said College Writing teacher Brian Fleckenstein. “We use the generative AI to help create the works cited page. We also use Grammarly on a regular basis to clean things up. However, it is unacceptable [for] students [to try] to use AI to do their writing for them entirely, creating an essay completely without their input.”

According to Merriam-Webster, generative AI is “artificial intelligence (see ARTIFICIAL INTELLIGENCE sense 2) that is capable of generating new content (such as images or text) in response to a submitted prompt (such as a query) by learning from a large reference database of examples.”

Even though we tend to think only of image generation when we hear the words “generative AI,” ChatGPT, Grok, and even Snapchat AI fall under this banner, as do school tools such as MagicSchool AI.

“I think it’s very specific to, you know,” said Introduction to AI teacher Ray Rakvic, “whatever curriculum is being taught, whether it should be permitted or not permitted, whether the content is something that should be authentic from the student. In that case, then it should be originated. All materials should originate from the student, and [in] other situations it can be very valuable.”

One of the pitfalls of AI in the classroom is that it encourages students to take shortcuts in every aspect of their schoolwork. However, teachers can tell more often than not when AI has been used.

“The AI,” said 21st-century learning facilitator Jared Shultz, “you can tell by the language if you know the student well enough, and that’s not how they typically write or how they sound. Also, with just the way that it looks on paper, you can tell the way the responses come out. You can tell if it’s too wordy or not wordy enough or not a topic or an answer. That’s the easiest way.”

It is also important to note that, according to the terms of use of some AI programs, many students are actually too young to be using the service. ChatGPT, for example, requires users to be at least 13 with parental permission or teacher supervision, and at least 18 otherwise.

If used correctly and with teacher supervision, AI can be a good way to check answers and get free tutoring; however, anyone who uses it should double-check the accuracy of the information and where the AI got it.