Are standardized tests an artifact?

In late March, MIT reinstated their standardized test requirement for the admissions process, prompting debate over the future of the SAT and ACT.

May 31, 2022

“We believe a requirement is more equitable and transparent than a test-optional policy.” – Massachusetts Institute of Technology, March 28, 2022

For thousands of hopeful teenagers, MIT’s decision came as a shock. Three years, three classes had come and gone, and standardized tests were nearly an artifact. Some had expected them to make a return after the initial panic of the COVID-19 pandemic, but after subsequent postponements, the tide had shifted. It wasn’t a safety issue anymore; it was a matter of effectiveness. It was a national conversation about whether or not the SAT and ACT, staples of college admissions for several decades, were even necessary anymore. Were they truly equitable? Did they actually measure aptitude? Could they reasonably predict success in college? Expert after expert said no.

Out of the absence, MIT’s dissent rang loud and clear. It was one of the first major universities to reinstate its standardized test requirement, a decision that was met with a chorus of mixed feedback. As loud as the Institute’s defenders were in recognizing the necessity of a standardized number, opponents of the move were just as fierce, decrying a precedent that could potentially raise socioeconomic barriers to education for years to come.

Nonetheless, MIT is one of the most prestigious universities in the world, as well as one of the most influential voices in the sphere of education, and although the policy is only in place “for the foreseeable future,” many doubt that it will change any time soon.

“I think it’s not so surprising that MIT, and I suspect some other private universities, will right now return to using the tests because their initial motivation for stopping their use is no longer imminent,” said Zachary Bleemer, a researcher at Harvard University.

The bigger question is this: will others follow suit? Over a month has passed since MIT’s initial announcement, and no other major universities have joined in reinstating their requirements. Still, some experts predict that a wave-like effect will take place, causing college admissions to return to a “pre-COVID” look. It remains to be seen whether this is in the works, but the bottom line is this: college admissions is at a crossroads, and the futures of millions hang in the balance.

How did we get here?

Before the SAT and ACT became behemoths administered to over 3 million students annually, standardized testing was a new concept in the mid-19th century, created to monitor the progress of another recent innovation: public schools. The 1800s saw a dramatic increase in the accessibility of education, in part due to a more equitable distribution of resources and opportunities, and the idea of “learning” underwent a drastic transformation. While it had previously been left up to individual institutions to decide what was and wasn’t taught, the development of statewide curricula made such decisions more or less uniform, hence the need for a standardized number to serve as a “progress check.” In addition to identifying which schools were performing their duties adequately, standardized tests were able to single out pupils who needed extra help, most of whom had been overlooked for years.

In fact, the idea of standardization being used as a ranking (or, more accurately, a barrier) only got its start with psychologist Henry Goddard, who brought intelligence testing into the mainstream in the United States. A member of the Ohio Committee on the Sterilization of the Feeble Minded, he campaigned for the widespread use of test results in all facets of society, including courts of law, and the craze of intellectual superiority that he started led to rapid growth in the number and use of standardized tests across the country. Stanford University Professor Lewis Terman’s Intelligence Quotient, or IQ, was developed in the early 20th century, and its embrace by the eugenics movement, an ideology later welcomed by the Nazis, gave the world its first taste of what could happen if these numbers found themselves in the wrong hands.

Similar concerns have muddied the history of post-secondary admissions; the College Entrance Examination Board, started in 1900 by the presidents of Columbia University and Harvard University, has often faced criticism for its racist origins and motivations, including its hiring of well-known eugenicist and author of A Study of American Intelligence, Carl Brigham, to design the Scholastic Aptitude Test (SAT). Brigham, who believed in the superiority of the “Nordic Race,” made several complaints about the depreciation of American education due to the influx of non-white citizens, claiming that the situation would “proceed with an accelerating rate as the racial mixture becomes more and more extensive.”

Thus, the SAT remains one of the most controversial parts of college admissions, as many claim that it promotes racial bias and a false reflection of the American dream. Journalist Mariana Rivera writes: “[The SAT] neatly aligns with the illusion of America’s meritocratic tradition: those who work the hardest will reap the greatest benefits, never mind structural inequality. But studies have proven, time and again, that standardized tests are much better at revealing things like household income, race, and level of parental education than they are at predicting the success of students in college classrooms.”

One of the biggest contributing factors to this concern has been the overuse of scores and their perceived position as the “be-all and end-all” of schooling; a study by the US Bureau of Education in the 1920s revealed that “testing was used to place students—in nearly all elementary schools and most high schools in urban areas—in groups of like abilities and channel them along predetermined educational paths.” This was further accentuated by the growth of technology in the following decades, which brought unprecedented convenience to the administration and scoring of these tests.

The government embraced it, too: Lyndon B. Johnson’s Elementary and Secondary Education Act and George W. Bush’s No Child Left Behind Act both placed standardized testing at the forefront of assessing student achievement, using it to hold schools accountable for the education they provided. The No Child Left Behind Act (NCLB for short) was particularly criticized in the years after it was passed, with many believing that it had an overall negative impact on American education, and several studies showing that schools were now placing too much emphasis on standardized test results. It was even theorized that the average student takes over 100 standardized tests in one school year.

For the critics, 2020 was a breath of fresh air. Lockdowns made it nearly impossible for some students to even take standardized tests, much less perform to the best of their ability. Nearly every college in the country implemented test-optional policies, and there was little debate over the practicality or the effectiveness of such a landmark decision.

Two years later, the picture looks a lot different. As the impact from COVID-19 starts to settle, it has become clear that most everyone’s intention is to return to the past, or perhaps a brighter future. Colleges, however, remain reluctant to reinstate standardized tests, once a formality, and the reasons aren’t so simple.

Where are we now?

College admissions is in an awkward place in terms of standardized testing. In the face of growing disagreement, institutions are gathering advice from countless experts, and they are receiving a mountain of diverse feedback.

For the proponents of test-optional policies, one of the largest concerns is bias. Beginning with the hefty price tag attached to these tests, the system puts poorer students at a disadvantage when it comes to scores. In a study conducted by Forbes’ Mark Kantrowitz, students whose family income totaled below $50,000 were only half as likely to reach a score of 1400 or more as those whose family income was above $100,000. White students were also shown to be twice as likely to reach a score of 1400 or more as Hispanic students, and three times as likely to reach the same score as Black or African-American students. This can happen for a multitude of reasons, such as the fact that more affluent students can often afford to pay for test-specific tutoring or, at the most extreme end of the spectrum, fraudulent results.

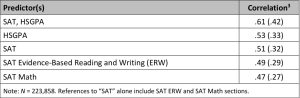

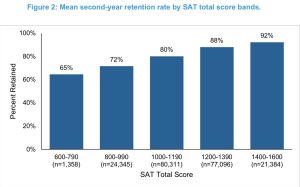

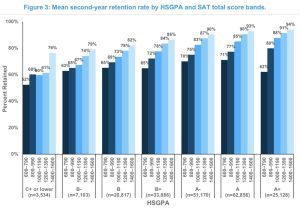

In addition to bias, many experts believe that the tests simply aren’t effective anymore. Universities admit students they predict will thrive in a college environment, and in many cases, the SAT and ACT have been shown to be poor indicators of postsecondary success. In a study by PACE (Policy Analysis for California Education), grades, not standardized tests, were found to be the best predictor of both first-year grade point averages and second-year persistence rates (the percentage of students who remain enrolled in a program after their first year of college). A study from the College Board found similar results: high school GPA was shown to have a higher correlation with first-year college GPA than SAT results did.

In a test-optional world, the results are supposed to reflect this science. Without biased, ineffective indicators, diversity in applicant pools is supposed to flourish, allowing qualified students to pursue success without arbitrary barriers holding them back. Indeed, many took advantage of these policies; in the 21-22 admissions cycle, only 51 percent of students submitted standardized test scores, according to the Common Application.

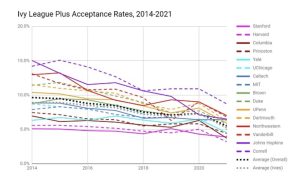

It’s ultimately questionable, though, whether such a trend is helping college admissions. Since test-optional policies went into effect, applications have skyrocketed, tanking acceptance rates across the board, especially at the most selective schools. From the 19-20 admissions cycle to the 20-21 cycle, every Ivy League university saw its acceptance rate fall, spurred by an unusually high number of applications. Harvard serves as a strong example: after it removed its standardized test requirement in 2020, applications jumped from 40,248 to 57,786, pushing its acceptance rate down to a record low of 3.2 percent. This is a trend that’s sweeping the nation; without an objective qualifier that would otherwise hold them below the marks of a “typical student,” thousands now feel comfortable applying to top-tier colleges, making those colleges even more competitive than they once were.

Students are also applying in greater numbers through Early Decision, a program that gives applicants who have decided on a clear first-choice school the opportunity to apply at an earlier deadline. These applicant pools are often smaller, and their members are typically accepted at higher rates, but a student who is accepted early is required to attend that school and must do so without the opportunity to weigh financial aid packages from other schools. Many prestigious universities are filling up their classes this way; Boston University accepted 50 percent of its total admitted applicants through Early Decision this year, compared to 13 percent just a decade earlier.

“Early decision leaves students with the impression that there’s only one right college for them,” said Jeffrey Selingo of the Atlantic. “For some, early decision has become the new regular decision.”

In light of these trends, wide acceptance of test-optional policies has waned recently. Several critics are citing evidence showing that, contrary to popular belief, standardized tests can be effective if used the right way. Take, for example, the College Board study that showed grades to be more highly correlated with college success than standardized test results; this same study also found that when weighed together, the two factors had a higher correlation with first-year college GPA than either factor weighed separately.

Proponents of standardized tests have also claimed that while exams like the SAT and ACT can certainly show their own bias, other factors of college admissions, particularly those used by colleges that flaunt a “holistic admissions” process, are just as susceptible to socioeconomic influence. Extracurricular activities, especially sports, often require a sizable financial commitment, as well as the support of one or more parents, neither of which can be found in some households. Additionally, some applicants can afford to pay for “essay coaching” to perfectly craft their personal statements, a resource that many disadvantaged students simply don’t have access to.

Many have even brought objections to the measure that colleges seem to place the most emphasis on: the grade point average. While test-optional proponents laud it for its seemingly higher effectiveness, there is a sizable amount of conflicting evidence, in large part due to the phenomenon known as grade inflation.

It’s as simple as it sounds: over the last few decades, median grades in high schools have risen, making it easier to get a higher GPA. While it sounds convenient that every student could get a 4.0, inflation devalues the mark just as it does in the real world, leading some to interpret it as an unreliable measure. In a study of North Carolina high schools published by the Thomas B. Fordham Institute, more than one third of students in Algebra 1 who received a “B,” or a proficient grade, failed to match it with a proficient mark on their end-of-course standardized exam. In fact, over 90 percent of parents in the study believed that their child was performing at or above grade level, while fewer than 50 percent of students were actually prepared for college.

Some have even found this to be present at Norwin.

“Since it’s difficult for colleges to compare different styles of grading from high school to high school — for example, how many free participation points a teacher gives — we have to take so many standards-based tests like the SAT, ACT, etc.,” said Nicholas Cormas, the current valedictorian of the junior class. “It also makes aspects like class rank and GPA worth less and less each year for college applications, resulting in a lot more stress because we feel the pressure to stand out with abnormal extracurriculars or ‘special’ essays.”

Even more alarmingly, while median GPAs have risen by 0.27 points in affluent schools, they have only risen 0.17 points in less affluent schools. Grade inflation is far more prevalent in schools with more resources, bringing concerns of bias to the measure of GPA.

“Unfortunately, it’s getting harder and harder to assume that an A truly represents excellence,” the Thomas B. Fordham Institute’s report reads. “And that’s a real problem for the future of individual kids and the nation they live in.”

Of course, such a devaluation reduces the power of grades to accurately and impartially predict success in college, and it raises the question: do college admissions offices have a fair measure that allows them to fill out a class effectively? Seemingly every approach has its pros and cons, but nothing truly rises to the status of “be-all and end-all.” Perhaps that’s where we’ve gotten stuck for so long.

It’s certainly where we find ourselves at the moment. As with every modern debate, there’s no consensus on how college admissions should proceed from here. One of the main reasons MIT cited for bringing back its standardized test requirement was to “better assess the academic preparedness of all applicants, and also help us identify socioeconomically disadvantaged students who lack access to advanced coursework or other enrichment opportunities that would otherwise demonstrate their readiness for MIT,” logic that, while defensible, seems to fly in the face of critics.

As for student opinion, the results point decisively in the other direction: over 80 percent of Norwin students in a recent survey answered that students should not be required to submit standardized test results on college applications, and over 90 percent answered that the tests were unfair for some groups of the population.

“The tests are extremely expensive, which discriminates against poorer families,” wrote an anonymous respondent. “I’d love to take the SAT’s six or seven times, but I just don’t have the money.”

As for actual data, it remains to be seen, likely because of the recency of such debates, whether test-optional policies were truly effective in achieving diversity, although one study published in the American Educational Research Journal found that they resulted in only a one percent increase in the number of Black, Latino and Native American students at 100 colleges. Until more data is explored and released, decisions will likely be based on the judgments of top officials, as well as the statistics they choose to observe.

Right now, those decisions are all but hidden from the general public. There could be dozens of universities planning to follow in MIT’s footsteps, bringing an end to the test-optional age, or perhaps higher education will present a unified front against the SAT and ACT, signaling that the changes brought on by COVID-19 are all but permanent. Standardized tests could be making a comeback, but they could just as easily find themselves on the wrong side of history. In any case, their fate looms large, as it represents the collective fate of students for years to come.